
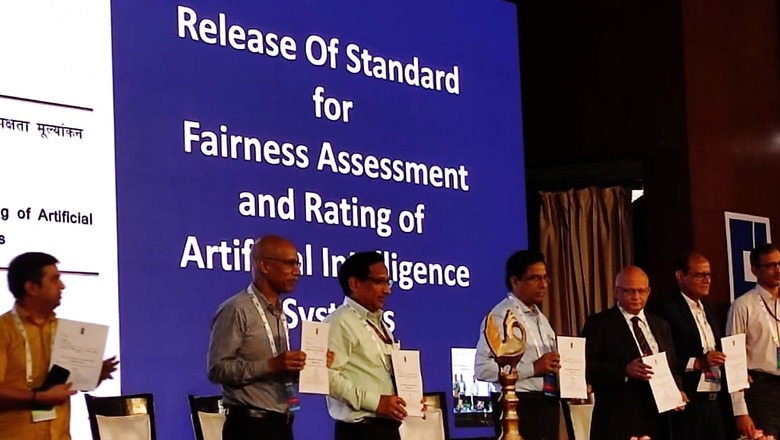

The Telecommunication Engineering Centre (TEC), a government agency under the Department of Telecommunications, has released a standard for fairness assessment and rating of artificial intelligence systems.

While the draft came in December last year, the standard was officially released on Friday during an event at the Centre for Development of Telematics (C-DOT) in New Delhi.

E-governance increasingly relies on AI systems, but these can be biased, leading to ethical, social and legal issues. Bias refers to a systematic error in a machine learning model that causes it to make unfair or discriminatory predictions.

The standard released by TEC provides a framework for assessing the fairness of AI systems. It includes a set of procedures for identifying potential biases and a set of metrics for measuring the fairness of an AI system.
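The article does not name the metrics the standard uses. As an illustration only, the sketch below computes one widely used fairness metric for tabular data, the demographic parity difference: the gap between the highest and lowest rate of favourable outcomes across groups. The field names (`gender`, `approved`) and the sample records are hypothetical, and nothing here should be read as the standard's own method.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Return the share of favourable outcomes (outcome == 1) for each group."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        favourable[group] += row[outcome_key]
    return {group: favourable[group] / totals[group] for group in totals}


def demographic_parity_difference(records, group_key, outcome_key):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(records, group_key, outcome_key)
    return max(rates.values()) - min(rates.values())


# Hypothetical tabular decisions; the column names are assumptions for illustration.
decisions = [
    {"gender": "F", "approved": 1},
    {"gender": "F", "approved": 0},
    {"gender": "F", "approved": 0},
    {"gender": "F", "approved": 1},
    {"gender": "M", "approved": 1},
    {"gender": "M", "approved": 1},
    {"gender": "M", "approved": 1},
    {"gender": "M", "approved": 0},
]

rates = selection_rates(decisions, "gender", "approved")
gap = demographic_parity_difference(decisions, "gender", "approved")
print(rates, gap)
```

A gap near zero suggests the groups receive favourable outcomes at similar rates; how large a gap is acceptable is a separate decision that depends on the use case.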

The standard can be used by governments, businesses and nonprofits to demonstrate their commitment to fairness. It can also be used by individuals to assess the fairness of the AI systems that they are using. Further, the standard is based on the ‘Principles of Responsible AI’ laid out by NITI Aayog, which include equality, inclusivity and non-discrimination.

Step-by-step approach

Artificial intelligence is increasingly being used across domains, including telecom and related information and communications technology, to make decisions that may affect day-to-day lives. Since unintended bias in AI systems could have grave consequences, this standard provides a systematic approach to certifying fairness.

It approaches certification through a three-step process involving bias risk assessment, threshold determination for metrics and bias testing, where the system is tested in different scenarios to ensure that it performs equally well for all individuals.
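The three steps can be pictured with a rough sketch for tabular data. Everything specific in it is an assumption made for illustration: the risk levels, the threshold values, the choice of per-group accuracy as the metric, and the sample rows are hypothetical and are not taken from the standard. The risk level from step one is simply passed in as an input.

```python
# Hypothetical mapping from the assessed bias risk (step 1) to the largest
# accuracy gap tolerated between groups (step 2). Values are illustrative only.
THRESHOLD_BY_RISK = {"low": 0.10, "medium": 0.05, "high": 0.02}


def accuracy_by_group(rows):
    """rows: iterable of (group, true_label, predicted_label) tuples."""
    correct, total = {}, {}
    for group, y_true, y_pred in rows:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def bias_test(rows, risk_level):
    """Step 3: compare the per-group accuracy gap against the chosen threshold."""
    threshold = THRESHOLD_BY_RISK[risk_level]
    accuracies = accuracy_by_group(rows)
    gap = max(accuracies.values()) - min(accuracies.values())
    return {
        "accuracies": accuracies,
        "gap": gap,
        "threshold": threshold,
        "passed": gap <= threshold,
    }


# Hypothetical test scenario: (group, true label, model prediction).
scenario = [
    ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 1, 1), ("B", 0, 1),
]

report = bias_test(scenario, risk_level="high")
print(report)
```

In this made-up scenario the model is right more often for group A than for group B, so the check fails; a real assessment would repeat such tests across several scenarios and metrics.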

There are different data modalities, including tabular, text, image, video and audio, among others. Put simply, data modality refers to the type of data used to train an AI system. For example, tabular data is organised in a table, text data is represented as text, image data is represented as images, and so on.

The procedure for detecting biases may be different for different data types. For example, a common form of discrimination in text data is due to the encoding of the text input. This means that the way that the text is represented in the computer may be biased, which can lead to the AI system making biased predictions.

At present, the standard is built for tabular data and is intended to be expanded to other modalities. It can be used in two ways: self-certification and independent certification.

What is self-certification?

This is when the entity that developed the AI system conducts an internal assessment of the system to see if it meets the requirements of the standard. If it does, the entity can then provide a report that says the system is fair.

What is independent certification?

This is when an external auditor conducts an assessment of the AI system to see if it meets the requirements of the standard. If it does, the auditor can then provide a report that says the system is unbiased.
